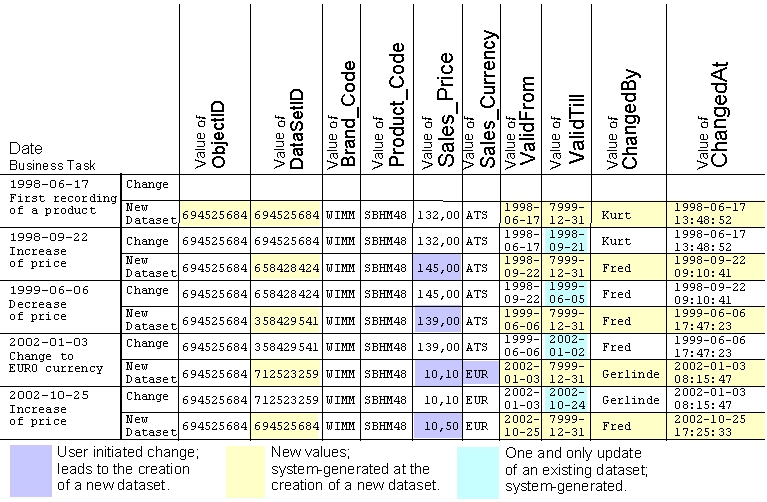

ObjectID

(DOUBLE)

Surrogate of an object which is known to an user

bei the 'user-known unique identifier' (Brand_Code and Product_Code

in the above example).

The ObjectId stays the same over time and

'ties together' all DataSets over time.

Technically it is the

DataSetId of the first dataset which was entered for a certain

'user-known unique identifier'.

DataSetID

(DOUBLE)

Primary key for the dataset as requested by the

database-system.

This attribute is generated by a random-number

generator because most database-systems honor a widespread spectrum

of the primary key of a table with a better performance.

ValidFrom

(DATE)

Date, from which on a change of an attribute is

valid.

At the time of writing this document (July 2006) the

design was, that the change of the validity is valid from midnight

on.

The exact time (down to 1/1000 of a second) is recorded in

the attribute ChangedAt.

ValidTill

(DATE)

Date, until which the previous value of the changed

attribute was valid.

ChangedBy

(VARCHAR(254))

User who initiated the change of an

attribute.

ChangedAt

(TIMESTAMP)

Timestamp,

when an attribute was changed.

CreatedBy

(VARCHAR(254))

User who entered the first dataset for a

certain 'user-known unique identifier'

CreatedAt

(TIMESTAMP)

Timestamp when the first dataset for a certain

'user-known unique identifier' was entered.

The

space did not explode.

In the first week I monitored very

often the grow of the database.

I was glad that it did not

explode, but the growth was more or less caused by the business of

taking orders, writing delivery notices and invoices.

Master

data make up only 20 % of all data.

Master data (for clients,

delivery adresses, products) makes up only ca. 20 % of all data.

So

the necessary changes to not contribute so much to growth caused by

the strategy of implementing the history of objects into

database-tables.

The changing of master-data is less than I

expected; it is limited to an occassional change of a clients

address or phone-number, correcting a typo or (most of all) a change

of the purchase- or sales-price of a product.

Dynamic

data is very rarely changed.

Dynamic data (orders, delivery

notices, invoices) makes up the mass of stored data.

As I could

see, there are very infrequent corrections which have to be recorded

in the history.

These corrections are mainly that a customer

cancels an order or the amount of an order position was typed wrong

and had to be corrected.

The

worst case: Quantity on Stock.

The most read and updated

database table is the one with the Quantity on Stock.

As there

was the requierement to find to each product the order, delivery

notice and invoice where it appears, the ObjectID of the

order-position was stored together with the quantity that went from

the stock to the order.

In exchange, there are no CreatedBy,

CreatedAt, ChangedBy and ChangedAt attributes in the 'Quantity on

Stock' table.

These values have to be retrieved from the

'Order-Position' table when the history of the quantity on stock of

a product is requested.

I

am not rich by the commission of harddisk manufacturers –

Unfortunately.

But I think

that the system has shown its proof.

Although, there are some

areas (e.g. the Quantity on Stock) where it has to be adapted.